Real-time Neural Woven Fabric Rendering

Xiang Chen, Lu Wang#, Beibei Wang#

Proceedings of SIGGRAPH 2024

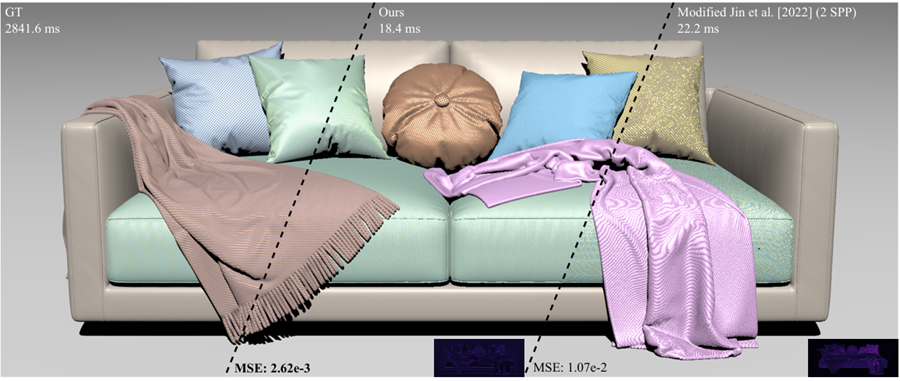

We present a neural network for real-time woven fabric rendering. This Sofa scene contains eight different woven fabric patterns. Our method represents several typical types of woven fabrics with a single neural network, rendering quickly with quality close to the ground truth.

Abstract

Woven fabrics are widely used in realistic rendering applications, many of which also require real-time performance. However, rendering realistic woven fabrics in real time is challenging: their complex structure and optical appearance cause aliasing and noise unless many samples are taken per pixel. The key to this problem is a multi-scale representation of the fabric shading model that supports fast range queries. Some previous neural methods address the issue, but at the cost of training a separate network for each material, which limits their practicality. In this paper, we propose a lightweight neural network that represents different types of woven fabrics at different scales. Thanks to the regularity and repetitiveness of woven fabric patterns, our network encodes fabric patterns and parameters as a small latent vector, which is then interpreted by a small decoder, enabling a single network to represent different types of fabrics. By taking the pixel's footprint as input, our network achieves a multi-scale representation. Moreover, owing to its lightweight structure, our network is fast and occupies little storage. As a result, our method renders and edits woven fabrics at nearly 60 frames per second on an RTX 3090, with quality close to the ground truth and without visible aliasing or noise.
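The abstract describes the architecture only at a high level. As a minimal sketch, assuming a plain NumPy MLP, the block below shows how a per-fabric latent vector, a shading query (UV plus incident and outgoing directions), and a pixel-footprint radius might be concatenated and interpreted by one small shared decoder. The latent size, layer widths, input layout, the footprint estimate from UV derivatives, the UV wrapping, and the names `decode`, `footprint_radius`, and `fabric_latents` are all hypothetical; the weights are random placeholders rather than a trained model, and this is not the authors' implementation.

```python
import numpy as np

# Hypothetical sizes: the actual latent size and layer widths are not given in the abstract.
LATENT_DIM = 16
HIDDEN = 32
# Query layout (assumed): latent + uv (2) + footprint radius (1) + wi (3) + wo (3)
IN_DIM = LATENT_DIM + 2 + 1 + 3 + 3

rng = np.random.default_rng(0)


def init_layer(n_in, n_out):
    # Random placeholder weights; a real decoder would be trained.
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out)


W1, b1 = init_layer(IN_DIM, HIDDEN)
W2, b2 = init_layer(HIDDEN, HIDDEN)
W3, b3 = init_layer(HIDDEN, 3)

# Hypothetical table: one small latent vector per fabric, all sharing one decoder.
fabric_latents = rng.normal(size=(8, LATENT_DIM))


def footprint_radius(duv_dx, duv_dy):
    """Approximate the pixel footprint in UV space by the longer screen-space
    UV derivative, in the spirit of mipmap level-of-detail selection."""
    return float(max(np.linalg.norm(duv_dx), np.linalg.norm(duv_dy)))


def decode(latent, uv, radius, wi, wo):
    """Evaluate the shared decoder for a batch of shading queries.
    latent: (N, LATENT_DIM), uv: (N, 2), radius: (N, 1), wi, wo: (N, 3).
    Returns (N, 3) non-negative RGB values."""
    x = np.concatenate([latent, uv, radius, wi, wo], axis=1)
    h = np.maximum(x @ W1 + b1, 0.0)  # ReLU
    h = np.maximum(h @ W2 + b2, 0.0)  # ReLU
    return np.logaddexp(0.0, h @ W3 + b3)  # softplus: log(1 + e^x) > 0


# Example: shade four points on fabric #2 with one shared footprint.
n = 4
# Wrap tiled UVs into a single pattern period (assumes the pattern repeats every unit).
uv = np.mod(rng.uniform(0.0, 10.0, size=(n, 2)), 1.0)
r = footprint_radius(np.array([0.01, 0.0]), np.array([0.0, 0.02]))
radius = np.full((n, 1), r)
wi = np.tile([0.0, 0.0, 1.0], (n, 1))
wo = np.tile([0.0, 0.6, 0.8], (n, 1))
latent = np.repeat(fabric_latents[2:3], n, axis=0)
rgb = decode(latent, uv, radius, wi, wo)
```

Because every fabric in this sketch shares `W1` through `W3`, adding a fabric costs only one extra latent row, which mirrors the storage argument in the abstract. The footprint input is meant to let a single query approximate the filtered appearance over the whole pixel, in place of supersampling.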


BibTeX

@inproceedings{Chen:2024:NeuralCloth,
  title={Real-time Neural Woven Fabric Rendering},
  author={Xiang Chen and Lu Wang and Beibei Wang},
  booktitle={Proceedings of SIGGRAPH 2024},
  year={2024}
}